LLMs aren't databases

People have built up intuitions for computers as we’ve lived with them over the decades. Our mental model is that they behave something like a database. We expect computers to record data for us precisely. Inaccuracy is usually attributed to the human system surrounding the computer.

This intuition is at odds with the reality of our new AI oracles. Large language models don’t store facts like databases. They are prone to hallucination (the tendency to make things up during generation). They contain ambiguity and uncertainty very much like the human systems surrounding them.

This post demonstrates this uncertainty and explains how it arises before discussing some implications to help revise your expectations. We conclude with recommendations to address hallucination by returning to the strengths of structured data.

LLMs store information in latent parameters

Large language models, like all neural networks, store information in so-called “hidden layers”. We can observe what goes in and what comes out, but all of the connections in between are hidden inside a black box.

Whereas statisticians model reality with equations informed by scientific understanding, machine learning engineers give models the data and let them decide how best to represent it. Statisticians focus their attention on parameter values fit by their models while machine learning engineers tend to focus only on the outputs.

These unobserved values are called “latent parameters” (latent meaning hidden, potential or dormant). Latent parameters aren’t directly interpretable like the facts and statistics that we’re used to.

A statistic like “the population of the UK” is identified by reference data that define a geography (the UK), a date (2021) and details such as the measurement method (the Census). These reference data tie the fact to a broader context and are themselves directly interpretable.

The latent parameters in a language model only make sense within the space of the model. Individual values don’t have any objective meaning; they encode information only by reference to their distance from other values in the network. This doesn’t mean they can’t be used to store (and retrieve) facts, just that we can’t interpret them like we do records in a database.
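As a rough illustration of meaning-by-distance, the sketch below compares toy vectors with cosine similarity. The three-dimensional vectors and the words attached to them are invented for this example; they are not taken from any real model, whose internal representations have thousands of dimensions.

```python
import math

# Hypothetical 3-dimensional vectors, invented purely for illustration.
# No single number means anything on its own; only the geometry matters.
vectors = {
    "london": [0.9, 0.1, 0.3],
    "paris": [0.8, 0.2, 0.4],
    "banana": [0.1, 0.9, 0.2],
}

def cosine_similarity(a, b):
    """Return the cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

city_pair = cosine_similarity(vectors["london"], vectors["paris"])
odd_pair = cosine_similarity(vectors["london"], vectors["banana"])

print(f"london vs paris:  {city_pair:.2f}")
print(f"london vs banana: {odd_pair:.2f}")
```

In this toy setup the two cities sit closer together than a city and a fruit, which is the only sense in which the numbers "mean" anything.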

Indeed this is at the heart of a long-standing competition between two approaches to artificial intelligence: connectionism vs computationalism. Where connectionist architectures store knowledge in networks of latent parameters, computationalist systems seek to formalise logical rules that can instruct an agent to act intelligently.

The connectionist approach is currently enjoying a resurgence, with deep-learning networks at the forefront of advances in language modelling, computer vision and more. The computationalist approach has fallen from favour, its opponents bemoaning the complexity and fragility of formal logic!

As with any dichotomy, we should expect that a synthesis of the two approaches ought to be stronger than either alone. We’ll return to this idea later.

Latent parameters work probabilistically

We can demonstrate the probabilistic nature of how LLMs store facts by looking at the model’s own estimate of its uncertainty about the text it generates.

We’ll look at the example above about the population of the UK. This is a readily understood fact that we might expect a large enough model to be able to store. We also have something of an objective truth in the official estimate published by the UK’s Office for National Statistics (ONS).

We can test the black box by asking the model to complete the following sentence:

The population of the UK in millions is …

We do this by feeding the text into the model, essentially “forcing it” to “say” the sentence. Then we look to see which tokens it “thinks” will come next and how probable it finds each choice. By comparing how likely it considers values like “50” or “60” as completions of the sentence, we can establish a probability distribution.
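The last step, turning the model's raw scores for each candidate into a probability distribution, can be sketched as follows. This is not the author's script: the scores below are made-up placeholders rather than real model output, and the function name is an assumption for illustration.

```python
import math

def softmax(scores):
    """Convert raw scores (logits) into probabilities that sum to 1."""
    peak = max(scores.values())  # subtract the maximum for numerical stability
    exps = {token: math.exp(s - peak) for token, s in scores.items()}
    total = sum(exps.values())
    return {token: e / total for token, e in exps.items()}

# Made-up scores for a handful of candidate completions (NOT real model output).
candidate_scores = {"50": 1.0, "60": 2.5, "64": 4.0, "67": 3.2, "70": 2.0}

probabilities = softmax(candidate_scores)
best_guess = max(probabilities, key=probabilities.get)

for token, p in sorted(probabilities.items()):
    print(f"{token}: {p:.3f}")
print("Most likely completion:", best_guess)
```

Restricting the calculation to a fixed set of candidates renormalises the probabilities over just those candidates, which is what lets us compare them on a single chart.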

I’ve prepared a small Python script to extract the probabilities of each possible completion from 1 to 100. For the evaluation I used the Mistral 7B v0.1 weights. I then used an R script to visualise the results. You can find the code on Codeberg.

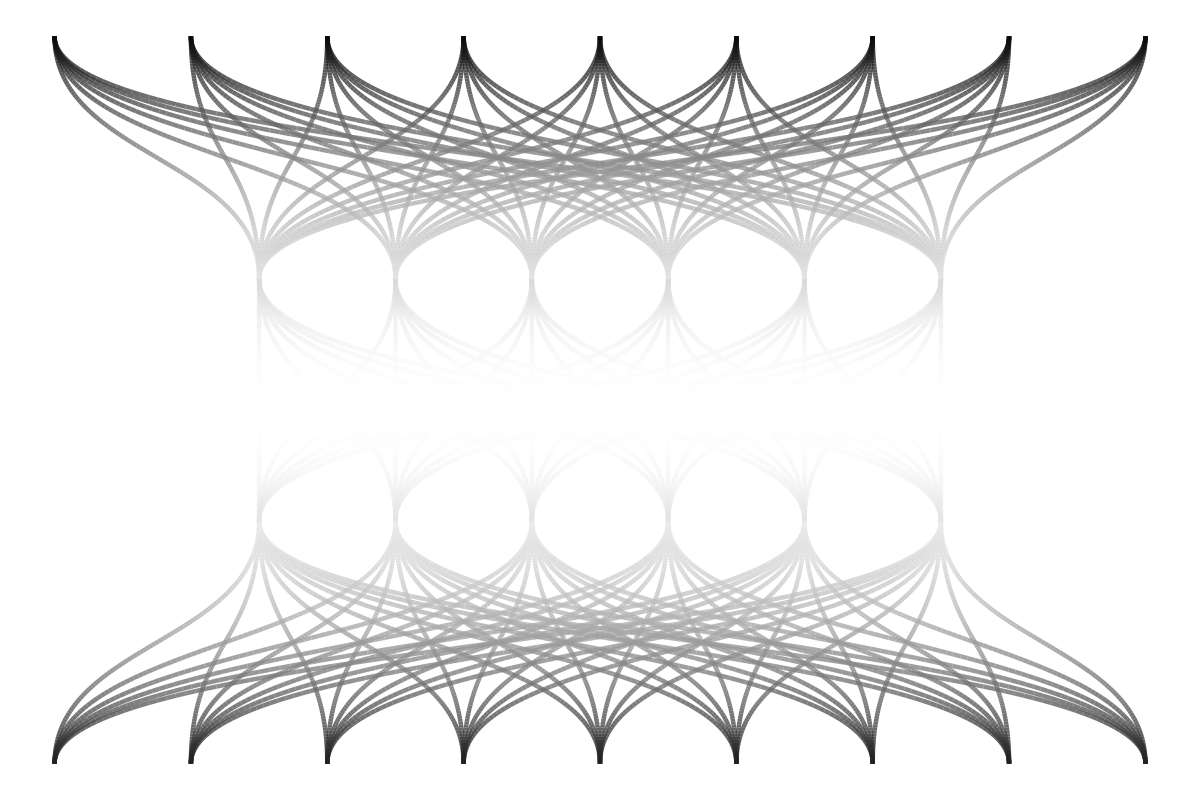

We can see from the peak on the chart that the single most likely completion is “64” (million). This is the model’s best guess of the UK population, and it’s not a bad one. The ONS estimates that the UK population was actually 67 million in mid-2021 (this is the latest estimate that was available at the time the Mistral model I used was released in 2023).

We can also see from the mountain range on the chart that it wasn’t the only value the model might consider a likely completion. Some of the probability mass is assigned to other possible values. If the model were 100% convinced of the 64 million value we’d see a sharp spike at this value and a flat line of zeros for all others. By contrast, the model assigns a non-zero probability to alternatives like 63, 65, 66 and the “true” value 67. Most of the probability mass is concentrated in the 60s, but within this range the model is really quite uncertain.
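One way to put a number on the difference between a sharp spike and a mountain range is Shannon entropy. The sketch below is only an illustration: both distributions are invented, not the values behind the chart.

```python
import math

def entropy_bits(distribution):
    """Shannon entropy in bits: 0 for total certainty, higher for more spread."""
    return sum(-p * math.log2(p) for p in distribution.values() if p > 0)

# Invented distributions for illustration only (not the model's real output).
certain = {"64": 1.0}
uncertain = {"63": 0.15, "64": 0.35, "65": 0.20, "66": 0.15, "67": 0.15}

print(f"Sharp spike:    {entropy_bits(certain):.2f} bits")
print(f"Mountain range: {entropy_bits(uncertain):.2f} bits")
```

A model that was certain of one value would score zero; the more evenly the probability is shared across neighbouring values, the closer the score gets to the maximum for that number of options.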

This is completely reasonable. The population changes over time. Despite having worked with ONS statistics for 25 years and having quoted this value on countless occasions I still look it up every time to make sure. Indeed, I’ll argue that this is what the model ought to be doing.

Latent parameters make models hard to control

The implication of latent parameters is that hallucination is inevitable. Everything in the model is made up; it’s just that some of it is asymptotically correct. If a “fact” is repeated enough during training then it will stick. The human brain is susceptible to the same deceit - repeat a lie often enough and it becomes the truth. Fortunately, for many practical use cases the models and training processes are good enough.

Similarly we should expect that LLMs will always be vulnerable to jailbreaking prompts (“ignore previous instructions”) and that we won’t be able to guarantee safety within the model. We can’t point to the neurons responsible for certain thought patterns and switch them off. As in humans, electro-shock therapy doesn’t work because the brain isn’t compartmentalised like a filing cabinet. Memories are distributed throughout the cortex. Memory is fallible and subject to all sorts of bias. We can’t just instruct people to say the right thing. Our reality is subjective and our loyalties fickle.

That doesn’t mean it’s not worth trying to ameliorate the situation. Indeed guardrails and self-reflection are demonstrably effective. It’s just that we can only reliably control the system from the outside. In a sense, system prompts designed to ensure safety are akin to client-side authentication or security by obscurity - better than nothing but fundamentally flawed. It may still be worthwhile investing effort in interpreting activation patterns within the network or devising training examples to improve safety, as long as the illusion of control doesn’t lead to hubris.

Formal systems are built for control

There is, however, a more robust solution available.

As hinted above, one answer lies in a computational approach. We can have the model look up the official statistics just like I find myself doing. Rather than trying to keep all human knowledge in its “head” we can use traditional databases to keep a record of facts.

We can do this by having the model call tools (e.g. via the Model Context Protocol), for example an ONS API.

flowchart LR

User@{ icon: "eos-icons:application-window-outlined", label: "**User**"}

LLM@{ icon: "eos-icons:neural-network", label: "**LLM**"}

Tool@{ icon: "eos-icons:api", label: "**Tool**"}

User -- "_Question_" --> LLM

LLM -- "_Request_" --> Tool

Tool -- "_Response_" --> LLM

LLM -- "_Answer_" --> User

This has the promise to give us the best of both worlds.

The language model can handle the ambiguity of language and common sense - e.g. that if we ask “what is the population of the UK?” then we implicitly mean “today” or at least “what is the latest estimate?”.

Meanwhile the tool ensures the formal guarantees of hard facts - i.e. that the provenance is known and the values are agreed to be correct. Tools make any computational technique available, like logic programming (which is what we used to mean by “reasoning” before it referred to an LLM talking to itself) or automated theorem provers. Indeed it’s exciting to think that language models might make these exacting tools more accessible.
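The division of labour described above can be sketched in a few lines. Everything here is a stand-in: `fake_model` is a placeholder for a real LLM, `lookup_population` is a hypothetical tool rather than the actual ONS API, and the only figure in the table is the mid-2021 estimate quoted earlier in this post.

```python
# Stand-in for an official statistics service (hypothetical, not the ONS API).
POPULATION_ESTIMATES = {
    ("UK", 2021): {"millions": 67, "source": "ONS mid-2021 estimate"},
}

def lookup_population(geography, year=None):
    """Hypothetical tool: return the requested estimate, or the latest one."""
    years = [y for (g, y) in POPULATION_ESTIMATES if g == geography]
    if not years:
        raise KeyError(f"No estimate for {geography}")
    chosen = year if year is not None else max(years)
    return {"geography": geography, "year": chosen,
            **POPULATION_ESTIMATES[(geography, chosen)]}

def fake_model(question):
    """Placeholder for the LLM: turns a vague question into a tool request."""
    text = question.lower()
    if "population" in text and "uk" in text:
        # No year mentioned, so the "model" infers we want the latest estimate.
        return {"tool": "lookup_population", "arguments": {"geography": "UK"}}
    return None

TOOLS = {"lookup_population": lookup_population}

def answer(question):
    request = fake_model(question)
    if request is None:
        return "I don't have a tool for that."
    result = TOOLS[request["tool"]](**request["arguments"])
    return (f"The population of the {result['geography']} was "
            f"{result['millions']} million in {result['year']} "
            f"({result['source']}).")

print(answer("What is the population of the UK?"))
```

The model only decides what to ask for; the number itself, and its provenance, come from the table.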

We can also use unstructured text data in a structured manner. With Retrieval Augmented Generation we can pull text from a database or search engine into the context window. This will still be represented with latent parameters but they can be grounded - i.e. taken from a recognised and reputable source of truth.

flowchart LR

User@{ icon: "eos-icons:application-window-outlined", label: "**User**" }

Retriever@{ icon: "eos-icons:database", label: "**Retriever**" }

LLM@{ icon: "eos-icons:neural-network", label: "**LLM**"}

User--"_Question_"-->Retriever

Retriever--"_Chunks_"-->LLM

User--"_Question_"-->LLM

LLM--"_Answer_"-->User
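A minimal sketch of the retrieval flow above, assuming a toy keyword-overlap retriever in place of a real search engine or vector database. The two documents, the function names and the prompt wording are all illustrative assumptions; the final call to a language model is left out.

```python
import re

# Toy document store; a real system would query a database or search engine.
DOCUMENTS = [
    {"source": "ONS",
     "text": "The UK population was estimated at 67 million in mid-2021."},
    {"source": "Blog",
     "text": "Language models store information in latent parameters."},
]

def tokenise(text):
    """Lower-case the text and split it into a set of words and numbers."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question, documents, top_k=1):
    """Rank documents by how many words they share with the question."""
    query = tokenise(question)
    ranked = sorted(documents,
                    key=lambda d: len(query & tokenise(d["text"])),
                    reverse=True)
    return ranked[:top_k]

def build_prompt(question, chunks):
    """Place the retrieved chunks, with their sources, ahead of the question."""
    context = "\n".join(f"[{c['source']}] {c['text']}" for c in chunks)
    return ("Answer using only the context below and cite the source.\n\n"
            f"Context:\n{context}\n\nQuestion: {question}")

question = "What is the population of the UK?"
chunks = retrieve(question, DOCUMENTS)
prompt = build_prompt(question, chunks)
print(prompt)
```

Because each chunk carries its source label into the prompt, the answer can be traced back to where the text came from.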

The computational approach gives us a means of control outside of the latent parameter space.

Just as we implement access control rules for databases, we can secure the tools available to language models to prevent them from ever reading prohibited or unsafe content in the first place. More broadly, we can ensure that any licensing or usage restrictions are followed because these relations are explicit and remain connected to the data and available for use by the system.
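As a sketch of what securing the tools might look like, the example below checks a caller's role before any tool runs. The roles, tool names and permission table are hypothetical; a real deployment would rely on its existing authentication and authorisation system.

```python
# Hypothetical permission table: which roles may call which tools.
TOOL_PERMISSIONS = {
    "lookup_population": {"public", "analyst"},
    "read_unpublished_estimates": {"analyst"},
}

def authorised_tools(role):
    """List the tools that should even be offered to the model for this role."""
    return sorted(name for name, roles in TOOL_PERMISSIONS.items()
                  if role in roles)

def call_tool(role, tool_name, registry, **arguments):
    """Run a tool only if the role is allowed; the check sits outside the model."""
    if role not in TOOL_PERMISSIONS.get(tool_name, set()):
        raise PermissionError(f"Role '{role}' may not call '{tool_name}'")
    return registry[tool_name](**arguments)

# Placeholder implementations standing in for real data sources.
registry = {
    "lookup_population": lambda: "published figure",
    "read_unpublished_estimates": lambda: "restricted figure",
}

print(authorised_tools("public"))
print(call_tool("analyst", "read_unpublished_estimates", registry))
```

However a prompt is worded, a request made under the `public` role never reaches the restricted data, because the rule is enforced in ordinary code rather than in the latent parameters.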

We also now have references so that we can cite sources and give credit or even pay royalties to the original content creators.

Language models aren’t like robots

Don’t expect language models to work like databases. They’re more like very eager and over-confident humans; that’s how they were trained, after all.

They’re excellent at coping with ambiguity and integrating context, but we should leave the cold hard facts to the databases.