Analyse backwards

A scientific approach to data promises to let us take decisions on the basis of empirical evidence. This ought to be objective and so more correct than managing our work according to opinions.

But how do we make sure we’ve got the right data? How do we ensure our analytical approach will enable us to take the right decision?

If the data is lacking then we’ll inevitably find ourselves falling back to opinions.

I believe the secret to data-driven decision making is to start at the end…

Starting at the end

You can let data drive your decisions by building your analysis backward from the conclusion to the data.

No, I don’t mean that you should forge the data you need to get the result you want!

The idea is that you should avoid the natural temptation to launch into working forward from where you are with the data at hand. Instead you should approach the task from the end. Pull your analysis into being by working backwards from the results you need to deliver.

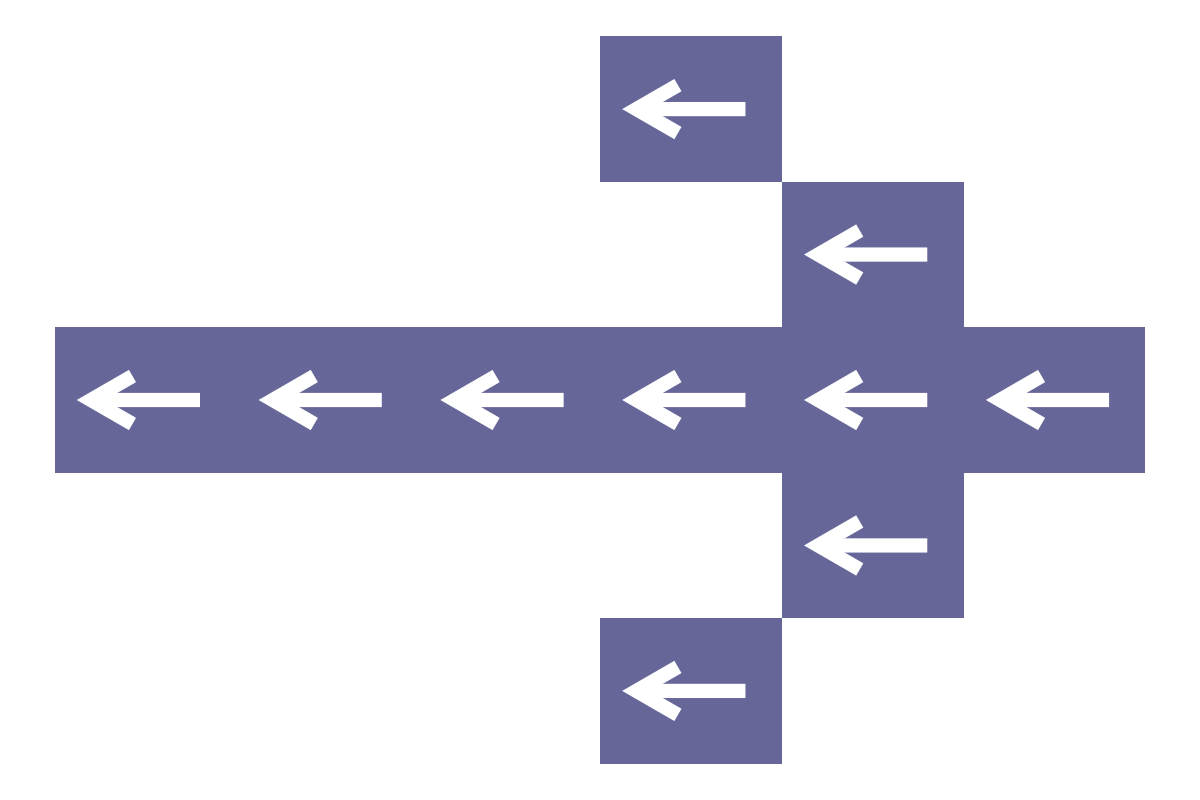

You should approach data analysis by working backwards along the chain of dependencies:

Data ← Model ← Metric ← Decision ← Purpose

Let’s review each step in turn, starting at the end…

Pursue a deep understanding of purpose

You should start by pursuing a deep understanding of the purpose of your analysis.

Stakeholders will typically specify data requests in terms of the results that are required. This is quite natural as it’s easier to explain requirements concretely by talking about solutions. Unfortunately this all too often leads to you building the wrong thing.

The difficulty is that a single idea for the solution is too brittle. A foregone conclusion like this misses the potential knowledge and experience of the data analyst. The prescribed solution might not be practical or worse still it might be misleading.

Instead it’s important to dwell in the “problem space”. This means the stakeholder and analyst can work together to specify a solution, leveraging the knowledge of both. In particular you need to identify the key research question.

Identify the research question

This is usually a decision that someone needs to take. You should dig thoroughly to ensure you’ve got as much critical context upfront as possible (reducing the risk of having to overhaul things later). You’ll know you’ve gone far enough when you can link the decision to money!

Be sure you know how the analysis relates to added value in the overall process. Assertions like “our users need this data” may be true but they are too vague: you need to know why they need it.

The ultimate purpose ought to come from an external stakeholder (if not then you need to keep asking “why?”). As such you should avoid the temptation to translate the purpose into your own words. Using your stakeholders’ language will maintain alignment and make it easier for them to trust and accept your findings.

Define metrics to inform decisions

Once you have your research question - e.g. “which page design should we pick to sell the most widgets?” - you can design your own decision rule.

To take a data-driven decision you need to define a metric - e.g. “we’ll choose the design with the highest revenue”. It ought to be obvious how the metric relates to the purpose (i.e. sales are measured by revenue).
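As a minimal sketch of what such a decision rule might look like, assume some hypothetical order records tagged with the page design that produced them. The names `orders`, `revenue_by_design` and `pick_design` are purely illustrative, and the figures are invented.

```python
from collections import defaultdict

# Hypothetical orders: (page design shown, order value). Invented figures.
orders = [
    ("design_a", 20.0),
    ("design_b", 35.0),
    ("design_a", 15.0),
    ("design_b", 5.0),
    ("design_a", 10.0),
]


def revenue_by_design(orders):
    """Metric: total revenue attributed to each design."""
    totals = defaultdict(float)
    for design, value in orders:
        totals[design] += value
    return dict(totals)


def pick_design(orders):
    """Decision rule: choose the design with the highest revenue."""
    totals = revenue_by_design(orders)
    return max(totals, key=totals.get)


print(revenue_by_design(orders))
print(pick_design(orders))
```

Writing the rule down this explicitly, before any real data arrives, makes it easy to check with stakeholders that the metric really does answer the research question.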

Whereas the purpose was defined by an external stakeholder, the discussion of metrics is where the analyst can bring their ideas to the table. Naturally, it’s important to reach a sense of shared ownership for the metric up front. If this is called into question later it will undermine the whole analysis.

It’s worth exploring alternatives until you get some buy-in. It’s vanishingly rare to find a single metric that can provide a complete perspective. Explore the caveats to preempt the critique.

You don’t have to explain every alternative you considered along the way. Here’s a quote from Simon Peyton Jones’ excellent talk on How to write a great paper:

“Do not recapitulate your personal journey of discovery. This route may be soaked with your blood, but that is not interesting to the reader. Instead, choose the most direct route to the idea.”

There doesn’t have to be a trade-off between different metrics. You can use a second metric to make up for problems with the first. You will need to have a way to decide how to proceed if the results are in conflict. How you do this depends on the context. You might decide that an inconclusive result means you need to gather more data or that you always have a default decision in the absence of overwhelming evidence.
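One way to sketch a rule that combines two metrics with a default decision is shown below. This is only an illustration under assumed names: `decide`, the `keep_current` default and the `min_lift` threshold are hypothetical, and the right threshold depends entirely on your context.

```python
def decide(revenue, conversion, default="keep_current", min_lift=0.05):
    """Pick a challenger only if both metrics agree and the evidence is strong.

    revenue, conversion: dicts mapping design name -> metric value.
    default: the decision taken when the evidence is inconclusive.
    min_lift: hypothetical threshold, the relative revenue improvement
        over the default that we treat as convincing.
    """
    best_by_revenue = max(revenue, key=revenue.get)
    best_by_conversion = max(conversion, key=conversion.get)

    # The two metrics disagree: treat the result as inconclusive.
    if best_by_revenue != best_by_conversion:
        return default

    winner = best_by_revenue
    if winner == default:
        return default

    # Both metrics agree, so check the improvement is large enough.
    lift = revenue[winner] / revenue[default] - 1
    return winner if lift >= min_lift else default


revenue = {"keep_current": 100.0, "design_b": 120.0}
conversion = {"keep_current": 0.020, "design_b": 0.025}
print(decide(revenue, conversion))
```

Agreeing the default and the threshold before looking at the results removes the temptation to adjust them afterwards.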

Specify the model needed to calculate metrics

Now we’re finally ready to think about the data!

You need to figure out how to calculate the metric. The calculation itself may be relatively trivial - i.e. spreadsheet formula trivial - or it may be something more complex like a regression coefficient. In any case you need to be perfectly clear on what inputs are required.

Learn from simulations

Run some examples at this stage with simulated data. This will let you confirm that you know exactly what inputs are required.

It’s easier to run the analysis when you can just invent data in the shape you need rather than having to first manipulate the data you have only to find out you hadn’t understood the schema correctly. Don’t try to tackle too many unknowns at once!

Simulation will also help you to explore the modelling technique itself so you can better understand how it works and any limitations. Ensure you can recover the assumptions of your generated data in the parameters of the model.
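As a minimal sketch of this kind of parameter recovery, suppose the model is a simple straight-line regression. The example below generates data from a known slope and intercept, then checks that an ordinary least squares fit gets close to them. The function names, the parameter values and the choice of model are all assumptions made for illustration; it uses only the Python standard library.

```python
import random


def simulate(n, slope, intercept, noise_sd, seed=0):
    """Generate n points from y = intercept + slope * x + Gaussian noise."""
    rng = random.Random(seed)
    xs = [rng.uniform(0, 10) for _ in range(n)]
    ys = [intercept + slope * x + rng.gauss(0, noise_sd) for x in xs]
    return xs, ys


def fit_line(xs, ys):
    """Ordinary least squares for y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# The assumptions we build into the simulated data...
TRUE_SLOPE, TRUE_INTERCEPT = 2.5, 10.0
xs, ys = simulate(n=2000, slope=TRUE_SLOPE, intercept=TRUE_INTERCEPT, noise_sd=1.0)

# ...should reappear, approximately, in the fitted parameters.
slope, intercept = fit_line(xs, ys)
print(f"slope: true={TRUE_SLOPE}, estimated={slope:.3f}")
print(f"intercept: true={TRUE_INTERCEPT}, estimated={intercept:.3f}")
```

If the estimates drift far from the true values, that tells you something about the model or the sample size you need before you have touched any real data.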

It might help to start with examples from the documentation of the model you’re using then revise them until they better reflect the domain you’re working on.

Find and prepare the data for analysis

At this point we have a specification for the data that’s required for analysis. We now need to figure out how to derive that dataset.

You should now have a clear idea of what to look for in the sources available to you and the sorts of questions you need to ask about provenance.
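The specification can be captured as a small check to run against any candidate source. The sketch below assumes rows arrive as dictionaries; the column names and the `check_rows` helper are hypothetical and stand in for whatever your model actually needs.

```python
# Hypothetical specification: the columns the model needs and their types.
REQUIRED_COLUMNS = {"design": str, "order_value": float}


def check_rows(rows, required=REQUIRED_COLUMNS):
    """Return a list of human-readable problems found in candidate rows."""
    problems = []
    for i, row in enumerate(rows):
        for column, expected_type in required.items():
            if column not in row:
                problems.append(f"row {i}: missing '{column}'")
            elif not isinstance(row[column], expected_type):
                problems.append(
                    f"row {i}: '{column}' should be {expected_type.__name__}"
                )
    return problems


candidate = [
    {"design": "design_a", "order_value": 20.0},
    {"design": "design_b"},                       # value is missing
    {"design": "design_b", "order_value": "35"},  # value has the wrong type
]

for problem in check_rows(candidate):
    print(problem)
```

The same check can be run first against your simulated data, which should pass cleanly, and then against each real source you are considering.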

It may be that the data you need doesn’t exist. You could instrument the system with monitoring or embark on primary research to gather the data you need. Having approached the research backwards you know exactly what questions you’ll need to include and can be sure any new data collection is fit for purpose. There won’t be any surprises later and you’re less likely to find yourself unable to draw the conclusions you need.

If you can’t collect new data now then you at least have what you need to form a business case to ask for it and to set out a roadmap for reaching this goal in future. You can defend your methodology and account for why you needed to fall back to alternative metrics.

Start doing the analysis

Congratulations! If you’ve got this far you’re ready to actually start working with the data and doing the analysis.

We now have a chain of logic from business value back to the data:

Purpose → Decision → Metric → Model → Data

Notice that having worked backward from the conclusions means we knew exactly what we needed at each stage. We kept a tight focus on our objective and were able to manage stakeholder expectations along the way.

Feedback ensures focus

As an epilogue I’d like to reflect on the parallels between this approach and software development methods.

I started my career with a project management approach called PRINCE2 (Projects In Controlled Environments) that predates (and possibly helped motivate) the Agile Manifesto. It suggests starting with a “product breakdown structure”. This is a hierarchical mapping of the requirements that breaks down the overall “composite product” into “component products”. A key insight is that you can invert this hierarchy and translate nouns into verbs to create a hierarchical mapping of tasks in a “work breakdown structure” complete with an ordered critical path.

While the product-based planning approach of PRINCE2 still feels relevant 30 years later, the cumbersome management process that went with it has certainly gone out of fashion. PRINCE2 can seem quite bureaucratic and artifact-heavy (change controls, exception reports, risk logs), as if it were designed to defend managers and consultants.

The Agile Manifesto meanwhile promised to value “Individuals and interactions over processes and tools”. Instead of comprehensive upfront planning the focus is on ensuring adaptability. I think the key insight here is that software development is an exploratory process. Even the most thorough plans can’t substitute for the discoveries you make once you get to interact with software. Estimates are doomed to failure as the problem always gets bigger the closer you get.

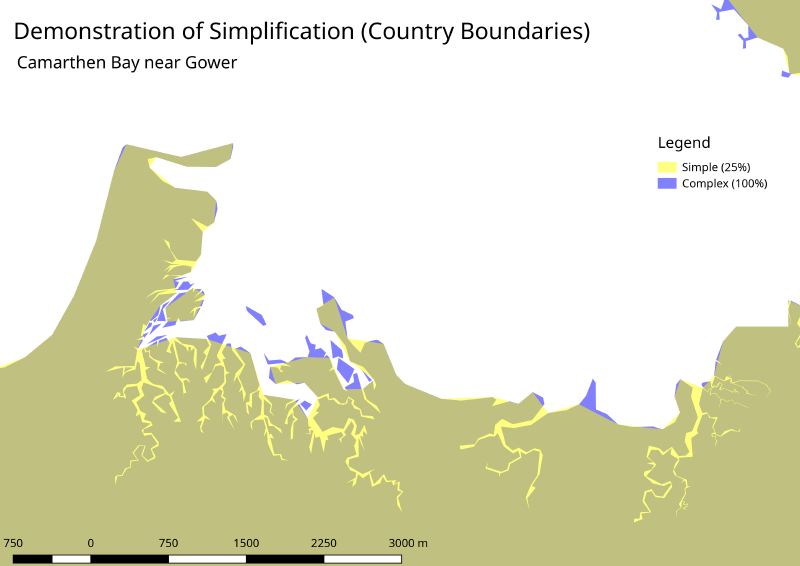

I prepared this demo when generating simplified boundaries for the ONS. When viewed from afar the coastline looks quite simple. Up close it's much more intricate. Paradoxically the coastline seems to get longer the closer you get. In fact the British coastline is about 1.21-dimensional!

This may seem at odds with what I’ve suggested above but I think the key concept common to all of these threads is that you can ensure that you’re focused on delivering value by building feedback into your process. You can figure out where you’re going by working backwards from the target and ensuring you have feedback every step of the way.

The extreme programming movement talks about test-driven design. We could think of this as decision-driven analysis.